Paper (Arxiv) | Dataset Usage | Source Code | Dataset (134.8 GB)

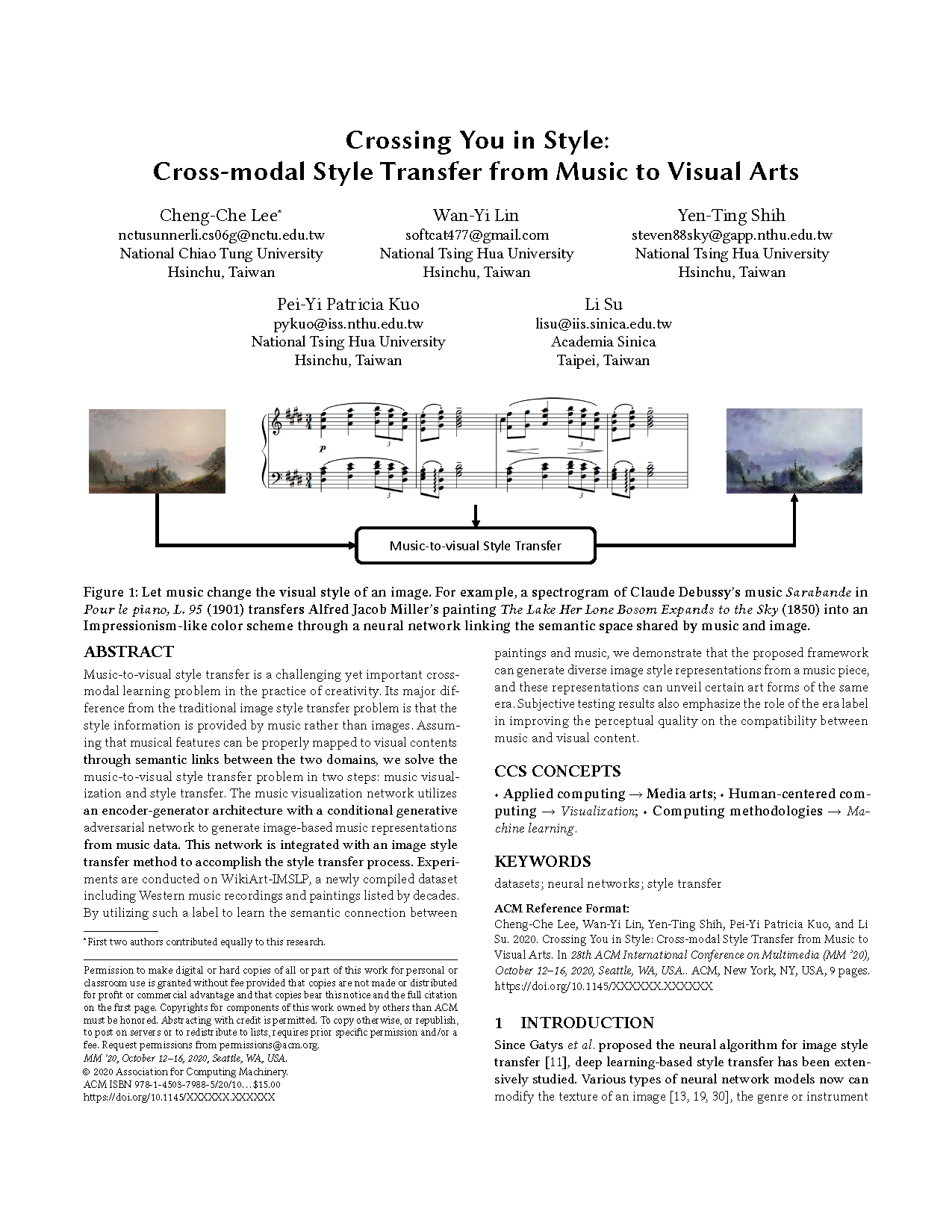

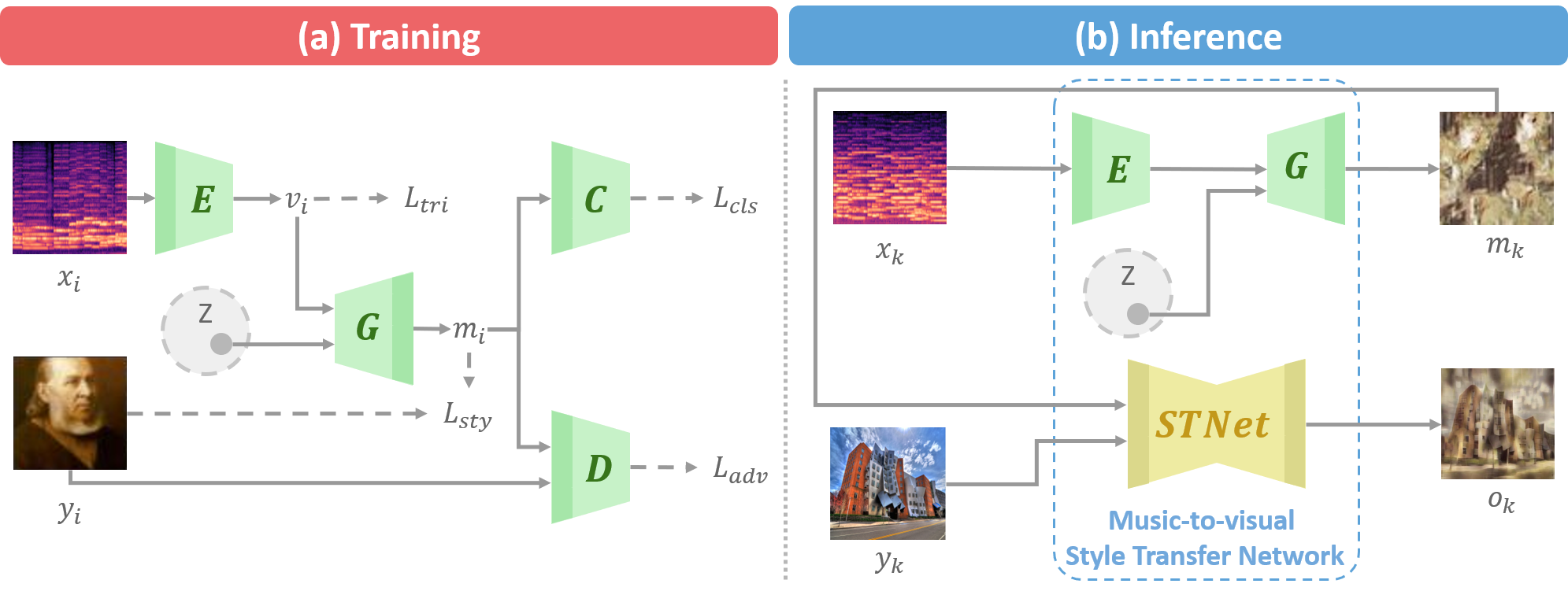

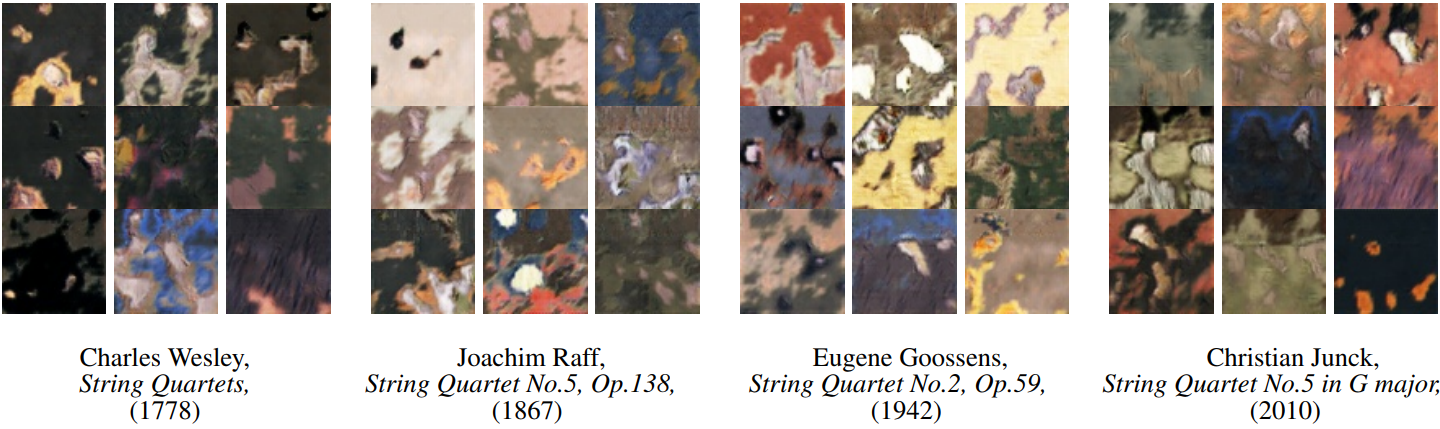

Music-to-visual style transfer is a challenging yet important cross-modal learning problem in creative practice. It differs from the traditional image style transfer problem in that the style information is provided by music rather than by images. Assuming that musical features can be properly mapped to visual content through semantic links between the two domains, we solve the music-to-visual style transfer problem in two steps: music visualization and style transfer. The music visualization network uses an encoder-generator architecture with a conditional generative adversarial network to generate image-based music representations from music data. This network is then integrated with an image style transfer method to accomplish the style transfer. Experiments are conducted on WikiArt-IMSLP, a newly compiled dataset of Western music recordings and paintings labeled by decade. By using this era label to learn the semantic connection between paintings and music, we demonstrate that the proposed framework can generate diverse image style representations from a single music piece, and that these representations can reveal certain art forms of the same era. Subjective test results also highlight the role of the era label in improving the perceived compatibility between music and visual content.
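The two-step decomposition can be read as a plain function composition. The sketch below is an illustration under stated assumptions, not the released source code: `music_to_visual_style_transfer`, `toy_visualize`, and `toy_transfer` are hypothetical names, and images are modeled as lists of channels rather than tensors. The point is only that any style transfer method with the shape `transfer(content, style)` can consume the output of the music visualization step.

```python
from typing import Callable, List, Sequence

# Assumption: an "image" is a list of channels, each a flat list of pixel values.
Image = List[List[float]]


def music_to_visual_style_transfer(
    music: Sequence[float],
    content: Image,
    z: Sequence[float],
    visualize: Callable[[Sequence[float], Sequence[float]], Image],
    transfer: Callable[[Image, Image], Image],
) -> Image:
    """Two-step pipeline: music visualization followed by image style transfer."""
    # Step 1: map the music (plus a random vector z) to an image-based style representation.
    style_image = visualize(music, z)
    # Step 2: hand that representation to any image style transfer method.
    return transfer(content, style_image)


# Hypothetical toy stand-ins, only to show how the pieces plug together.
def toy_visualize(music: Sequence[float], z: Sequence[float]) -> Image:
    level = sum(music) / len(music)
    return [[level + zi] for zi in z]


def toy_transfer(content: Image, style: Image) -> Image:
    # Shift each content channel by the mean of the matching style channel.
    return [[p + sum(s) / len(s) for p in c] for c, s in zip(content, style)]


result = music_to_visual_style_transfer([1.0, 3.0], [[0.0, 1.0]], [0.5], toy_visualize, toy_transfer)
print(result)  # [[2.5, 3.5]]
```

Swapping `toy_transfer` for a real style transfer network leaves the first step untouched, which is the modularity the two-step design relies on.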

Cheng-Che Lee*, Wan-Yi Lin*, Yen-Ting Shih, Pei-Yi Patricia Kuo, and Li Su, "Crossing You in Style: Cross-modal Style Transfer from Music to Visual Arts", in ACM International Conference on Multimedia, 2020.

* indicates equal contribution

@inproceedings{lee2020crossing,
  title={Crossing You in Style: Cross-modal Style Transfer from Music to Visual Arts},
  author={Lee, Cheng-Che and Lin, Wan-Yi and Shih, Yen-Ting and Kuo, Pei-Yi and Su, Li},
  booktitle={Proceedings of the 28th ACM International Conference on Multimedia},
  pages={3219--3227},
  year={2020}
}

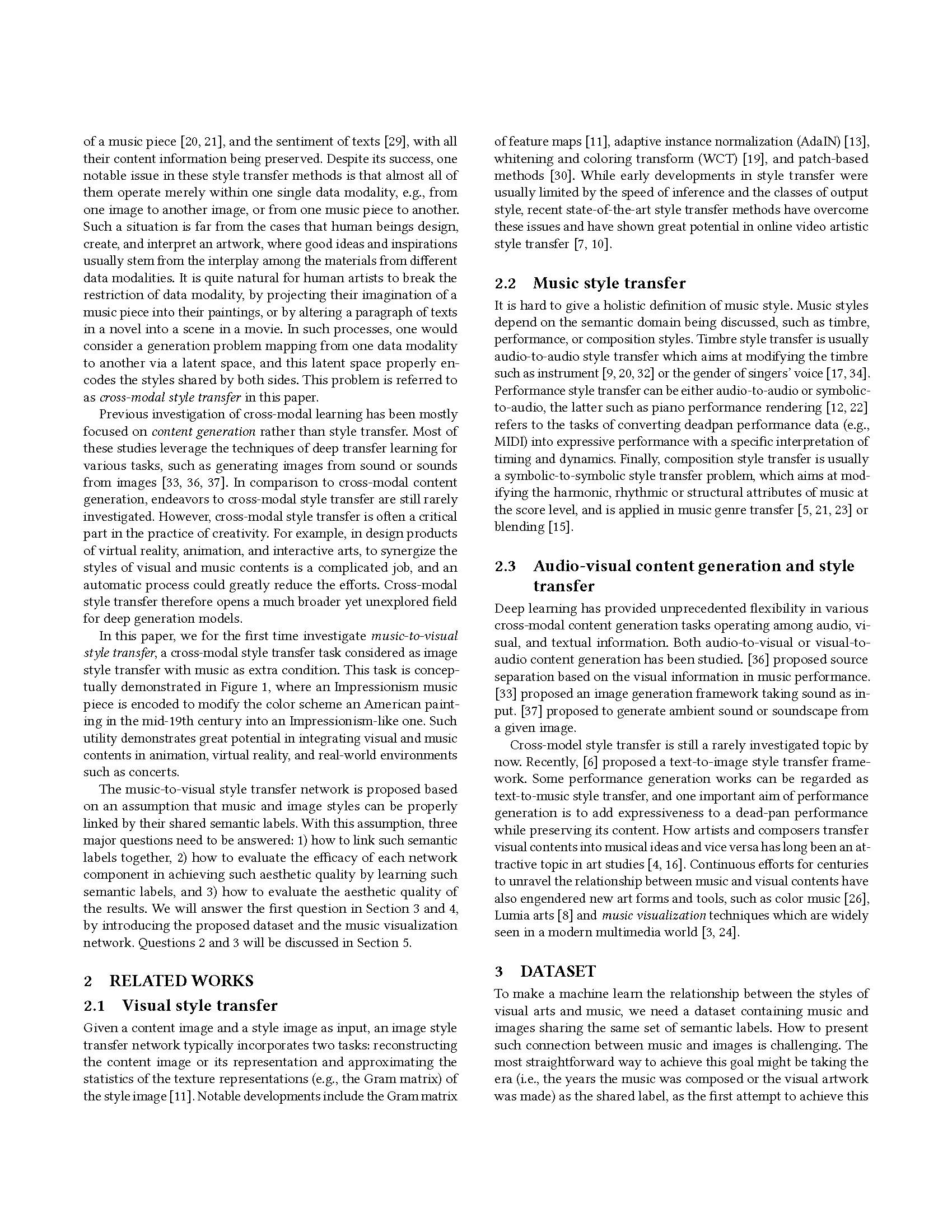

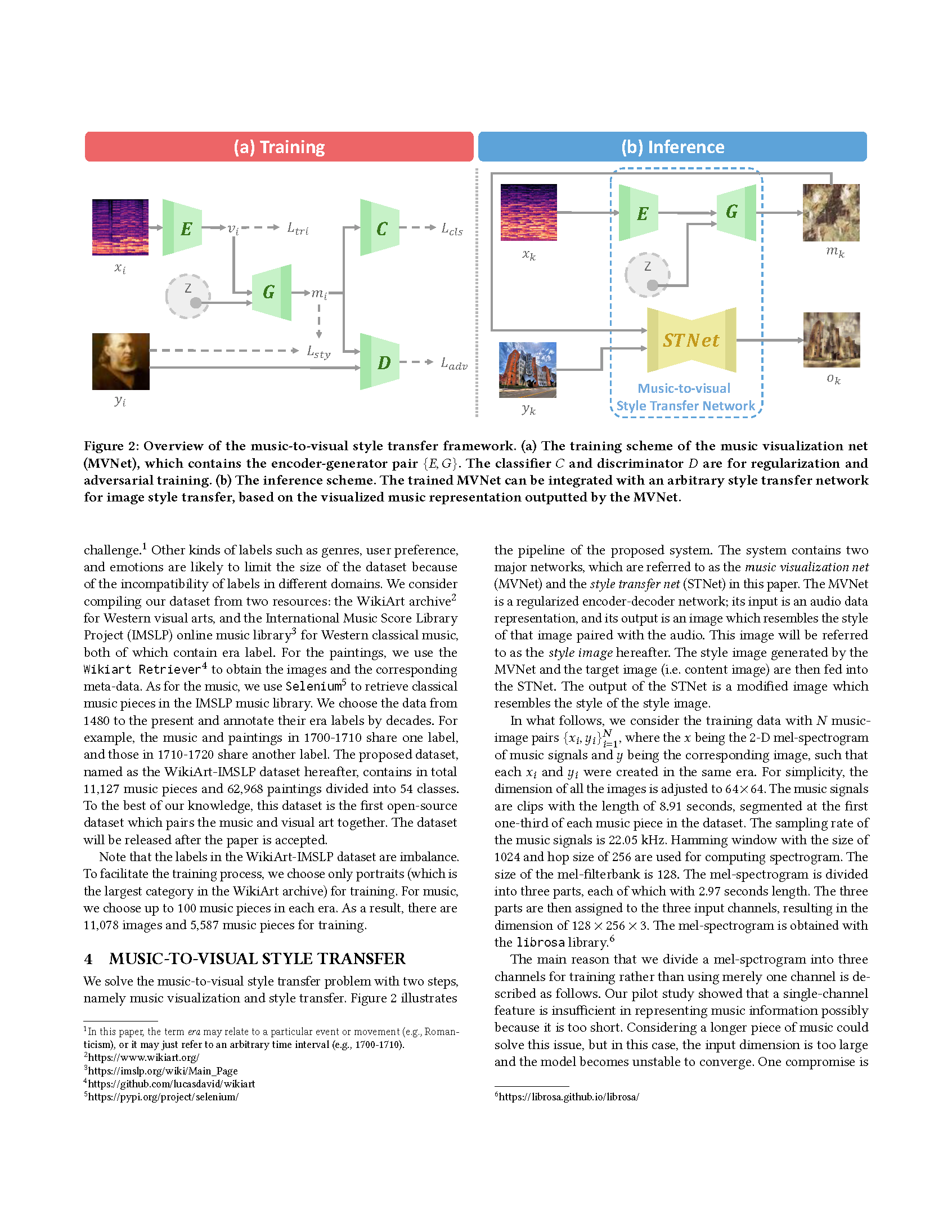

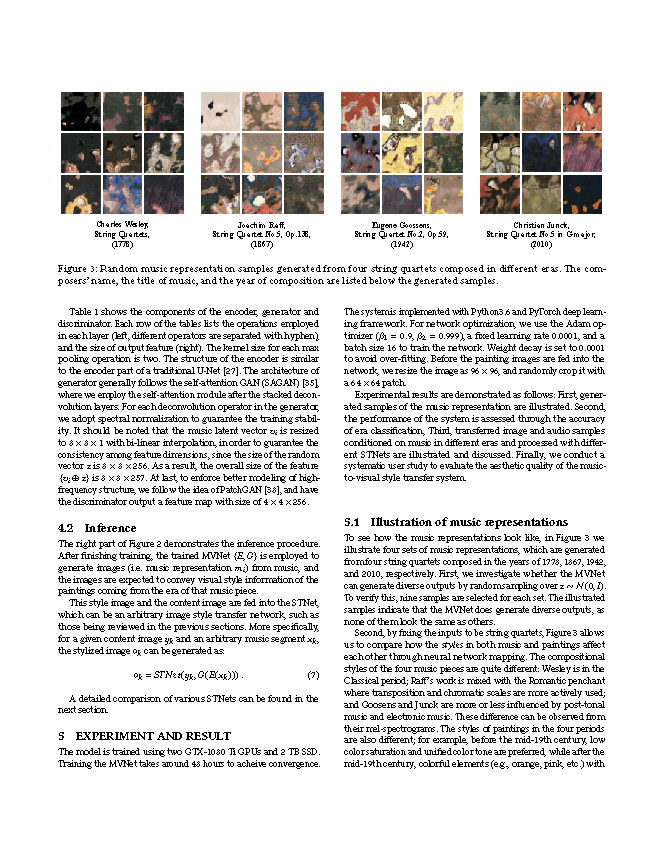

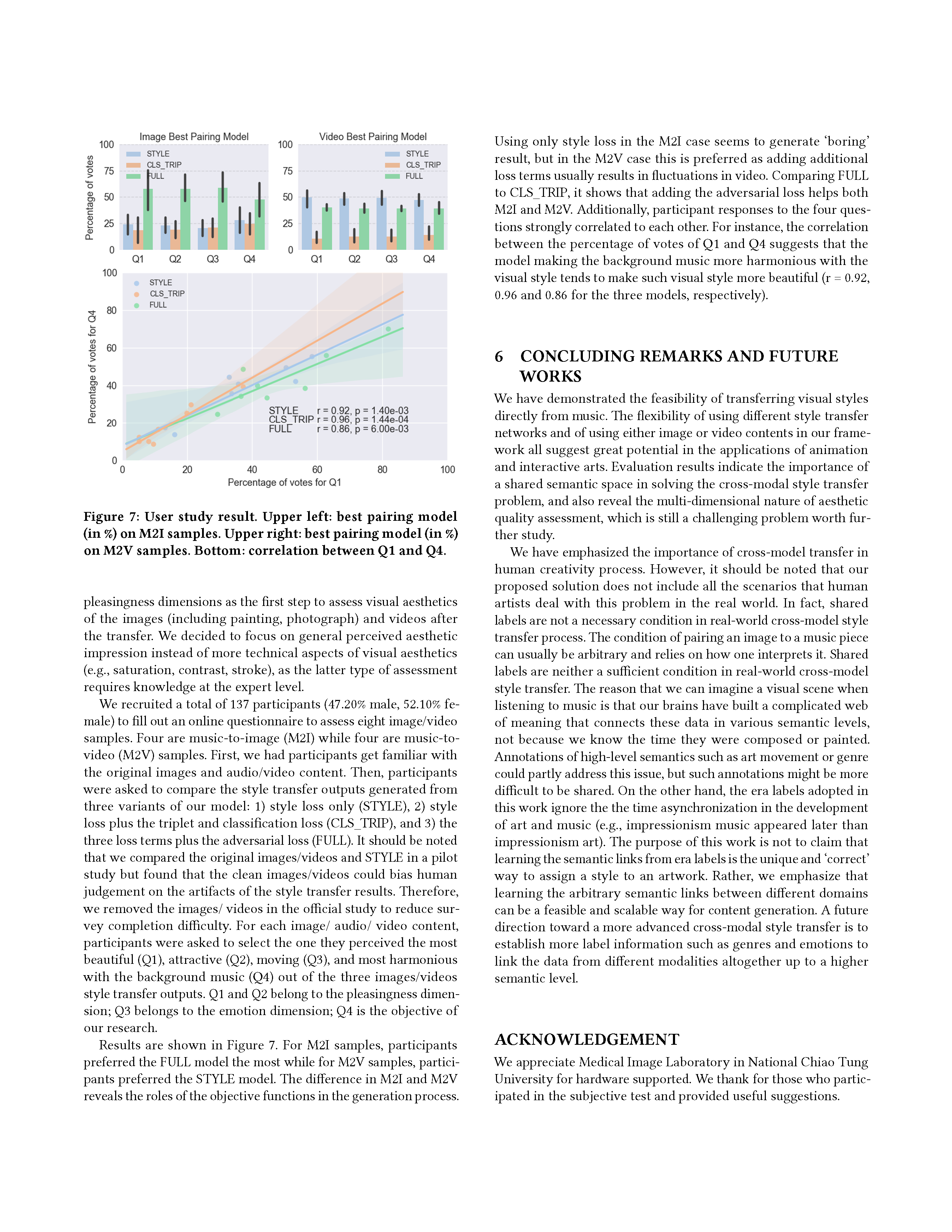

Overview of the proposed networks for music-to-visual style transfer. Panel (a) shows the training scheme of the music visualization network (MVNet), which contains the encoder-generator pair {E, G}; the classifier C and the discriminator D are used for regularization and adversarial training, respectively. Panel (b) shows the inference scheme: the trained MVNet can be integrated with an arbitrary style transfer network, which performs image style transfer based on the visualized music representation output by MVNet.
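The training scheme in Panel (a) combines an adversarial term from D with a classification term from C. A minimal sketch of such an objective follows, assuming a standard binary cross-entropy GAN loss, a softmax classifier C over era labels applied to the generated image, and a hypothetical weight `lam`; the exact losses and weights used in the paper may differ. Scores are plain floats so the arithmetic is easy to follow.

```python
import math
from typing import Sequence


def bce(prob: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy for a single predicted probability."""
    p = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Softmax cross-entropy for one example, computed stably via log-sum-exp."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum - logits[label]


def discriminator_loss(d_real: float, d_fake: float) -> float:
    # D should score real paintings as 1 and generated images as 0.
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake: float, c_logits_fake: Sequence[float], era_label: int, lam: float = 1.0) -> float:
    # Non-saturating adversarial term: {E, G} try to make D output 1 on generated images.
    adv = bce(d_fake, 1.0)
    # Regularization term (assumed form): C should recognize the music's era in the generated image.
    cls = cross_entropy(c_logits_fake, era_label)
    return adv + lam * cls
```

In this form the classification term pushes generated images toward the visual statistics of the music's era, while the adversarial term keeps them close to real paintings.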

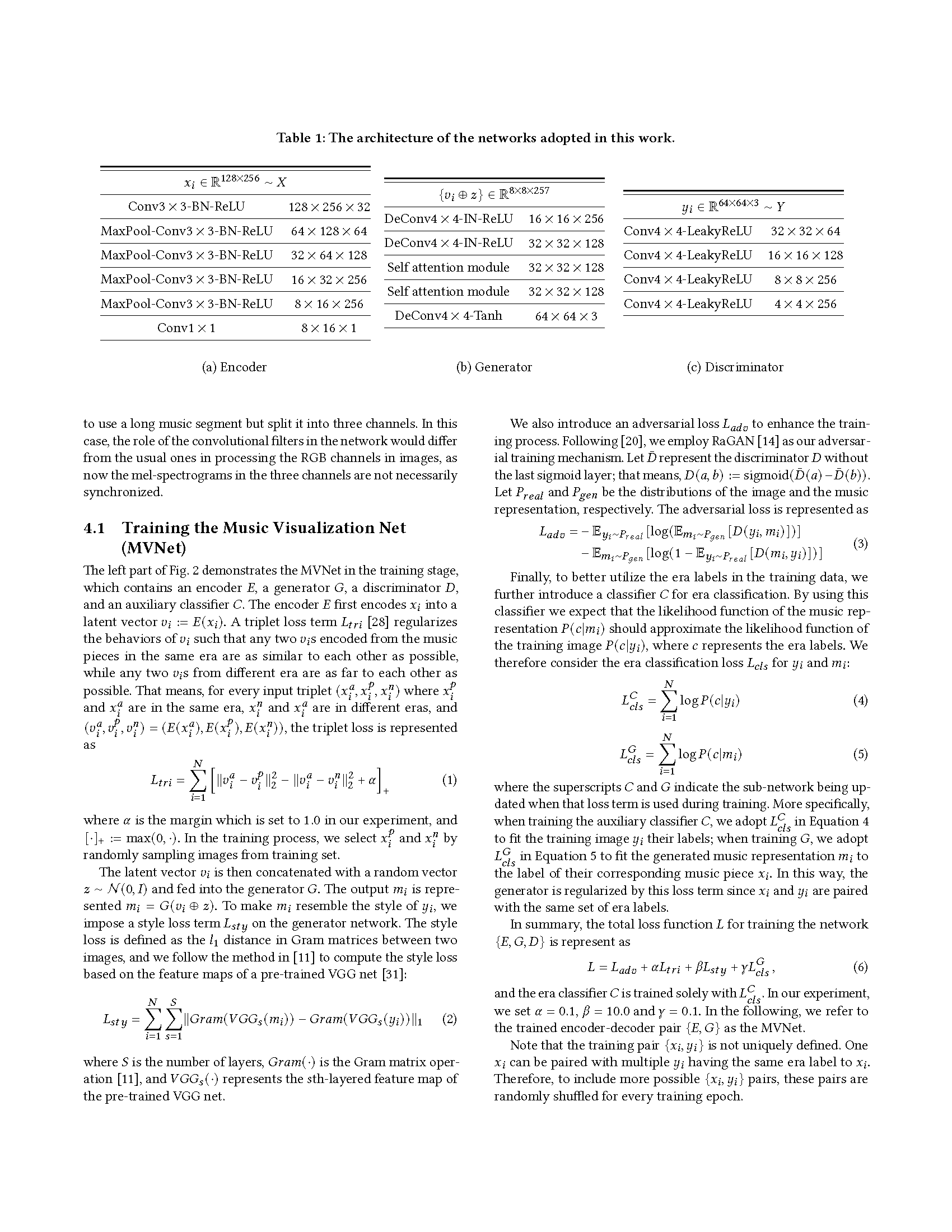

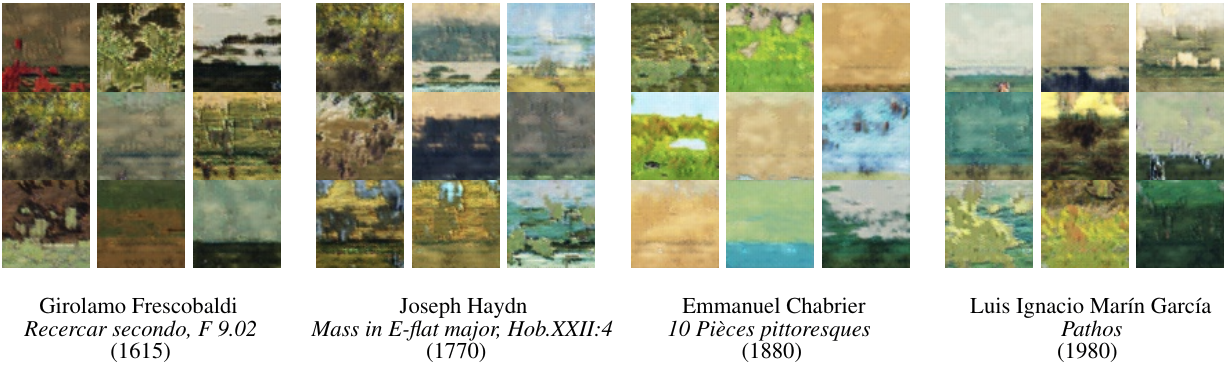

Random sampling results of music representations for given music samples. The metadata below each picture lists the performance name on the first line, the performers on the second line, and the year on the last line. Within each grid, the same music fragment is combined with different random vectors to generate the music representations, showing that our model can produce diverse representations for a given music fragment. The paintings used for training are from the portrait category (upper) and the landscape category (lower).
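Diversity in these grids comes from pairing one fixed music condition with different random vectors. The sketch below assumes the generator input is the concatenation of the encoder output and a Gaussian noise vector z ~ N(0, I); that conditioning scheme, the function name `sample_generator_inputs`, and the dimensions are illustrative assumptions rather than details taken from the paper.

```python
import random
from typing import List


def sample_generator_inputs(music_code: List[float], n_samples: int, z_dim: int, seed: int = 0) -> List[List[float]]:
    """Pair one fixed music embedding with several random vectors z ~ N(0, I)."""
    rng = random.Random(seed)  # seeded so a grid can be regenerated exactly
    inputs = []
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(z_dim)]
        # Concatenate the (fixed) music condition with a fresh noise vector.
        inputs.append(music_code + z)
    return inputs


grid = sample_generator_inputs([0.1, 0.2], n_samples=3, z_dim=4, seed=42)
```

Every row of `grid` shares the same music prefix and differs only in its noise part, so any variation in the generated images is attributable to z.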

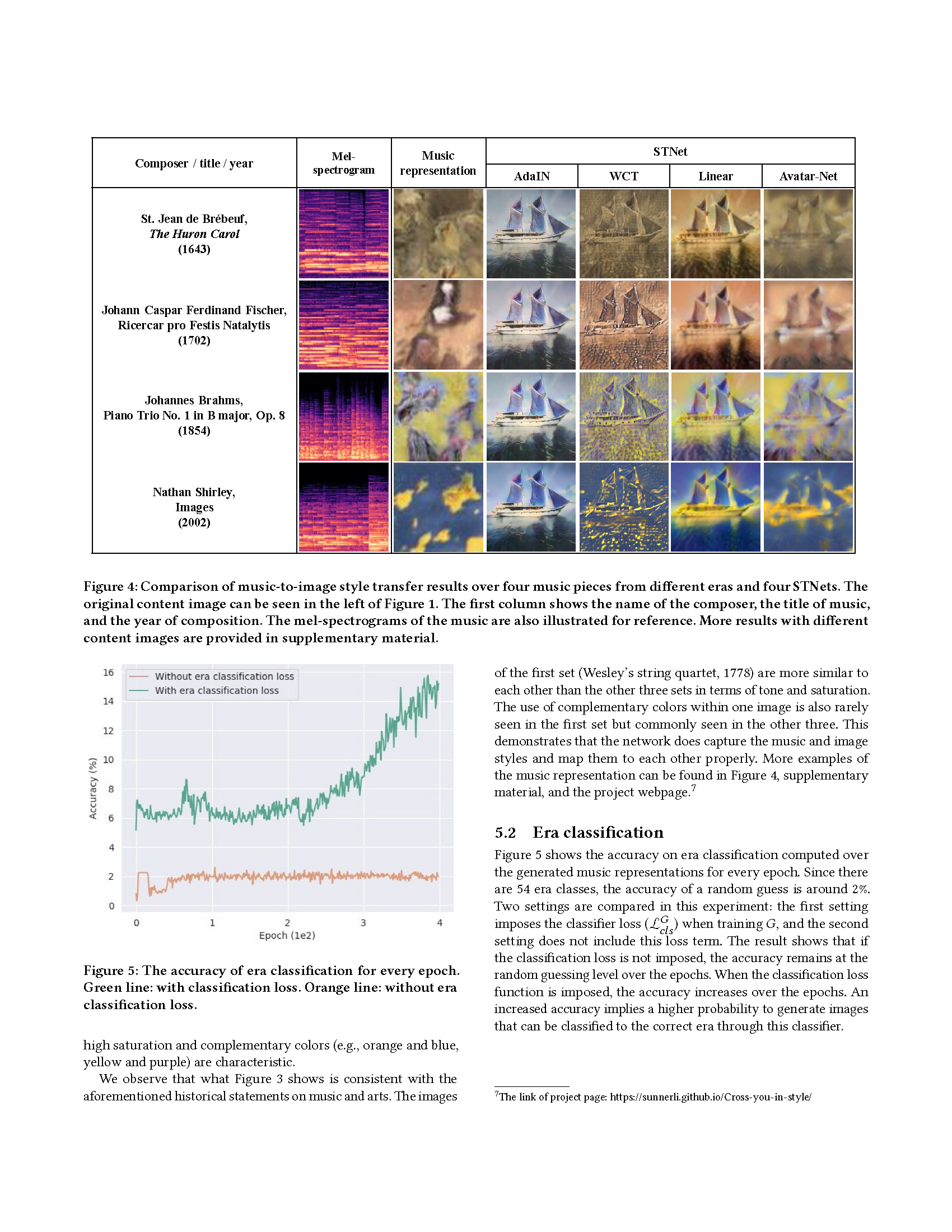

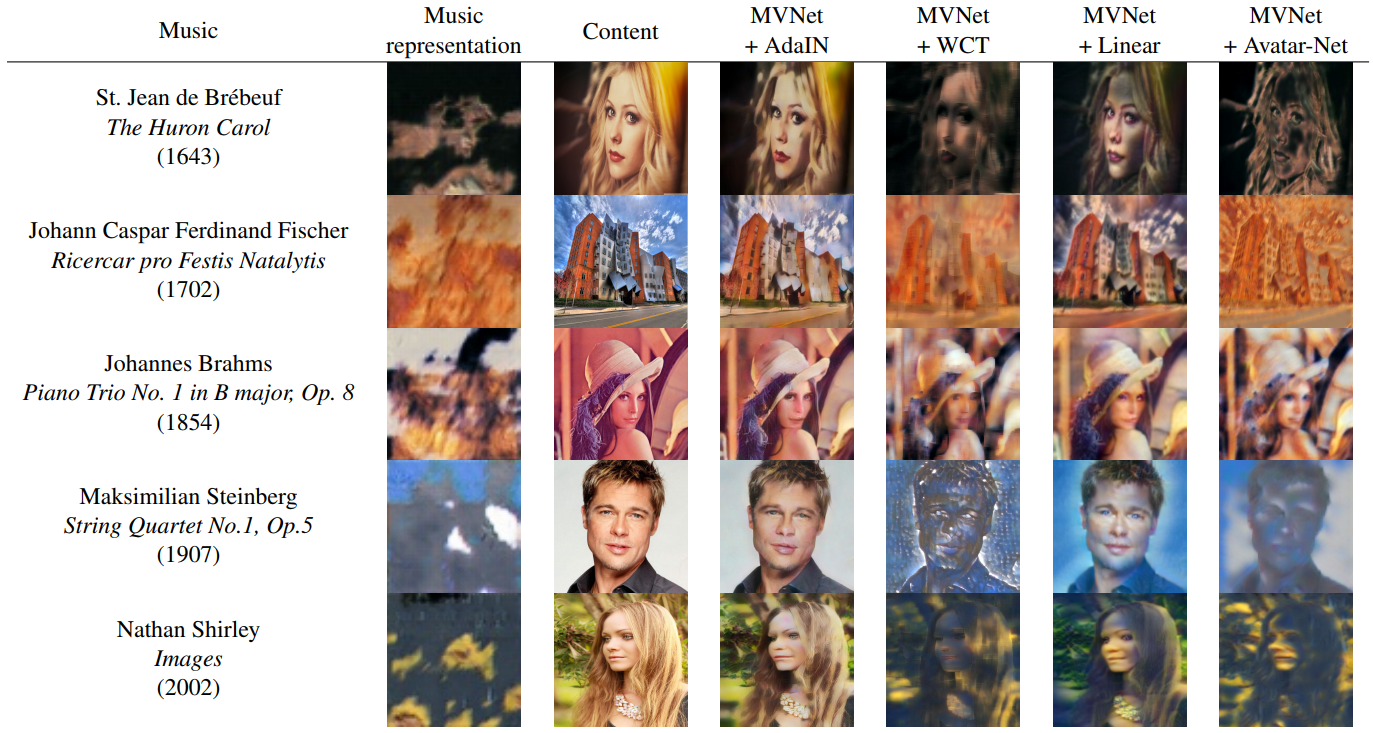

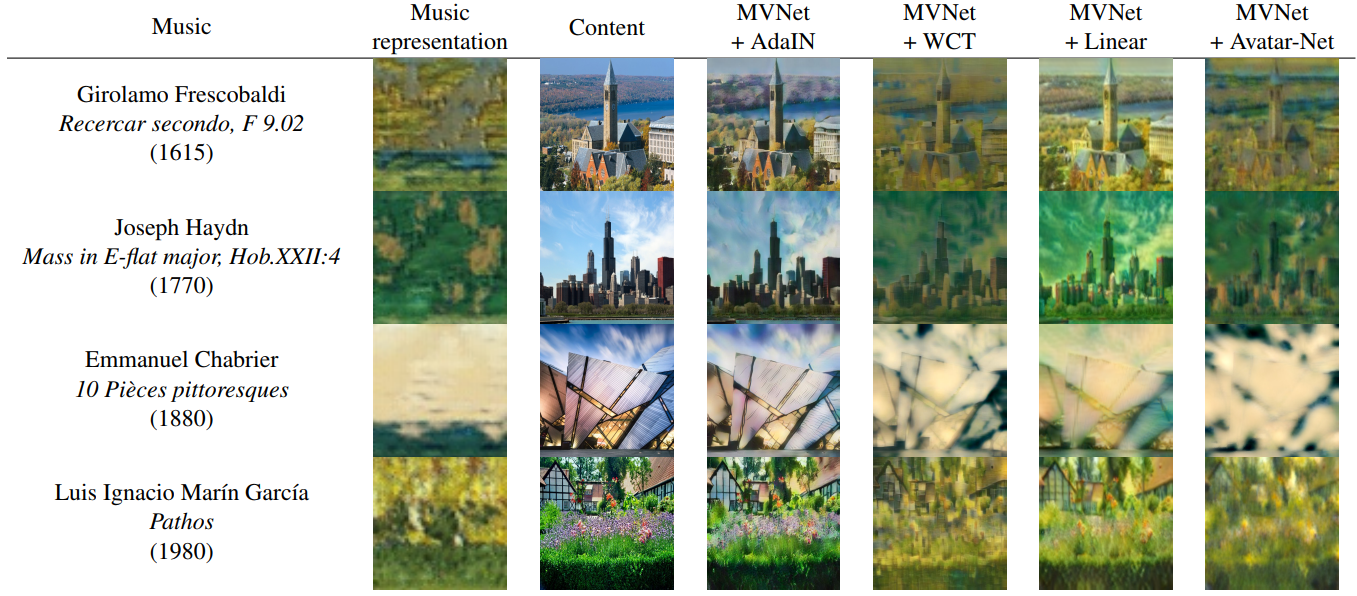

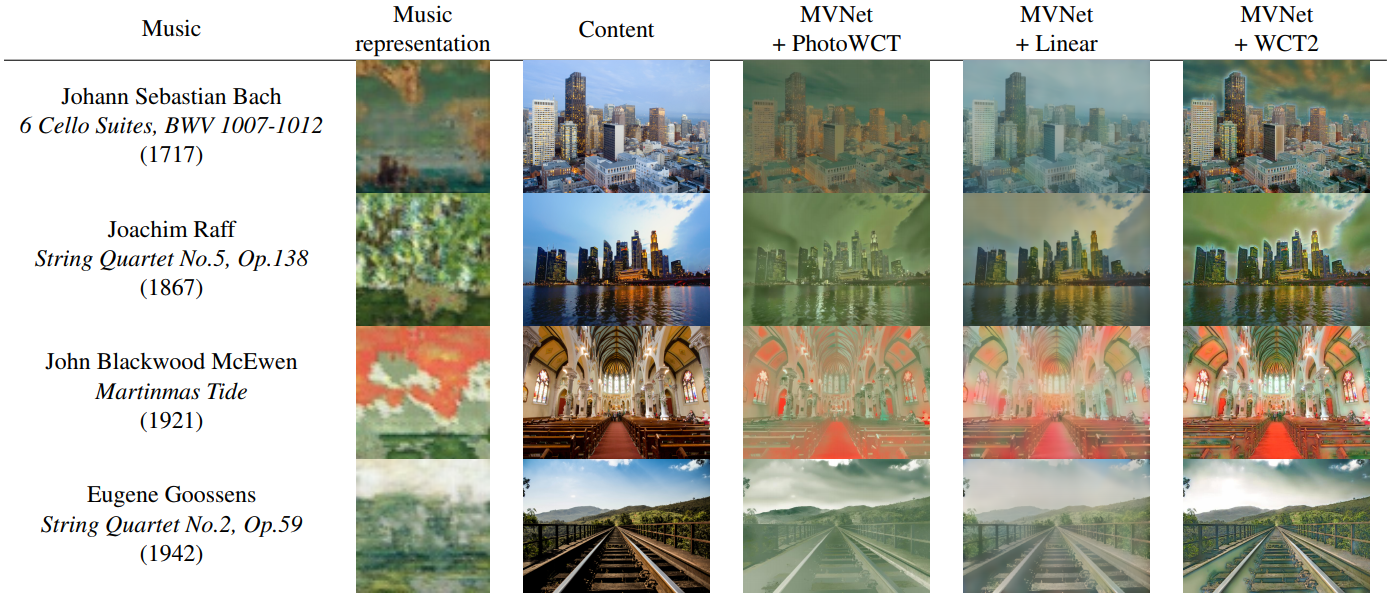

Examples of music-based style transfer results on different content images. In each music name cell, the first line gives the name of the performance followed by its author in brackets, and the last line gives the performance year. The music representations are randomly generated, conditioned on the given performance. By switching between different image style transfer approaches, our system can render the content image according to the input music. The paintings used for training are from the portrait category (upper) and the landscape category (lower).
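Because MVNet outputs an ordinary image, an arbitrary style transfer method can render the result. As one illustrative example, adaptive instance normalization (AdaIN) aligns the per-channel mean and standard deviation of the content features with those of the style features: AdaIN(x, y) = σ(y) (x - μ(x)) / σ(x) + μ(y). The sketch applies this formula to small per-channel lists; in a full AdaIN pipeline the inputs are encoder feature maps (for example from VGG) and the output goes through a trained decoder. Treat AdaIN here as an example choice, not a statement of which methods produced the figure.

```python
import math
from typing import List


def adain(content: List[List[float]], style: List[List[float]], eps: float = 1e-5) -> List[List[float]]:
    """Adaptive instance normalization over per-channel feature lists."""
    out = []
    for c_ch, s_ch in zip(content, style):
        # Per-channel statistics of the content features.
        c_mean = sum(c_ch) / len(c_ch)
        c_std = math.sqrt(sum((x - c_mean) ** 2 for x in c_ch) / len(c_ch) + eps)
        # Per-channel statistics of the style features (here: from the music representation).
        s_mean = sum(s_ch) / len(s_ch)
        s_std = math.sqrt(sum((x - s_mean) ** 2 for x in s_ch) / len(s_ch) + eps)
        # Normalize content, then rescale and shift with the style statistics.
        out.append([s_std * (x - c_mean) / c_std + s_mean for x in c_ch])
    return out


stylized = adain([[0.0, 2.0]], [[10.0, 14.0]])
```

The content's spatial pattern survives (the order and relative spacing of values), while its mean and spread are taken from the style, which is why different music representations yield different color tones on the same content image.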

Column year labels: 1643, 1702, 1853, 1907, 2002.

Column year labels: 1615, 1770, 1880, 1980.

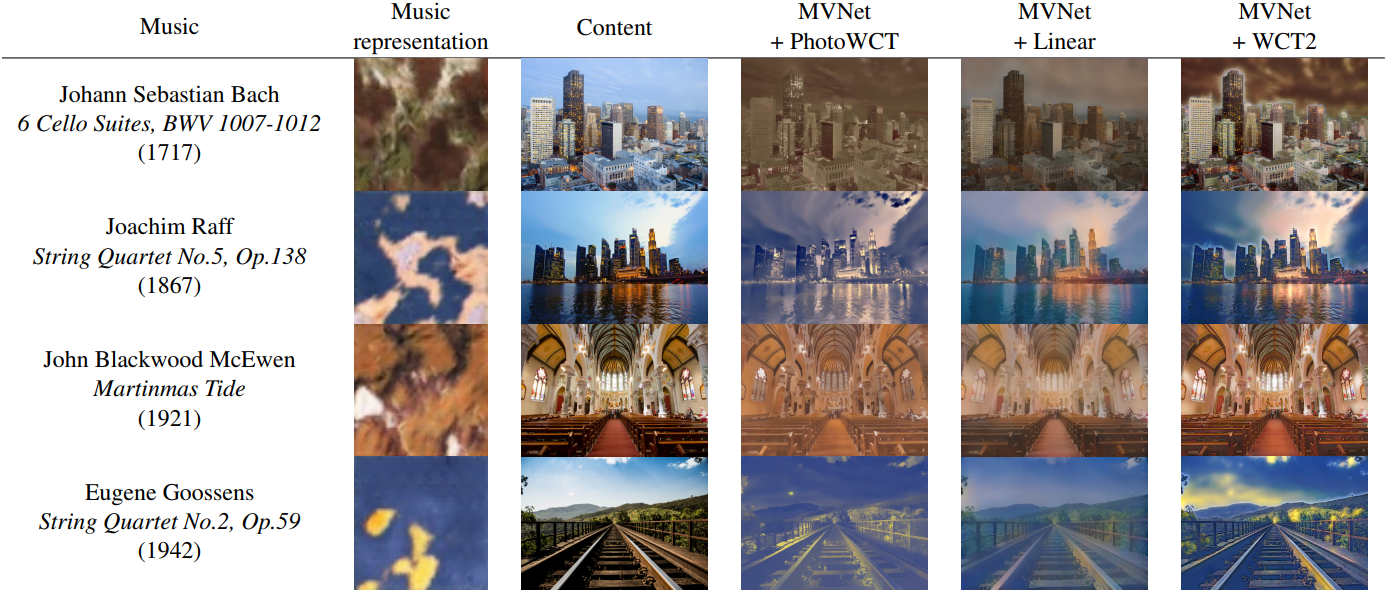

More examples of music-based photorealistic style transfer results. Note that neither the test content images nor the style music were observed by our network during training. The results show that our framework can also be adapted to photorealistic style transfer while still preserving the content. The music segments are randomly selected.

Column year labels: 1717, 1867, 1921, 1942.

Examples of dynamic music-based style transfer. We demonstrate the effect of different music genres, including symphony, piano solo, and chamber music. Our model produces different color tones for different music genres and fine-tunes the visual effect of each individual video.
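For video, the style has to change over time as the music plays. One plausible way to drive this (an assumption, not a procedure stated on this page) is to cut a music window centered on each video frame and feed each window to the music visualization network to obtain a per-frame style image. `frame_audio_windows` and all of its parameters are hypothetical.

```python
from typing import List, Tuple


def frame_audio_windows(
    n_frames: int, fps: float, sample_rate: int, window_sec: float, n_samples: int
) -> List[Tuple[int, int]]:
    """Return (start, end) audio sample indices of a window centered on each video frame."""
    half = int(window_sec * sample_rate / 2)
    windows = []
    for i in range(n_frames):
        # Time of frame i in seconds, converted to an audio sample index.
        center = int(round(i / fps * sample_rate))
        # Clip the window to the bounds of the recording.
        start = max(0, center - half)
        end = min(n_samples, center + half)
        windows.append((start, end))
    return windows


windows = frame_audio_windows(n_frames=3, fps=1.0, sample_rate=10, window_sec=2.0, n_samples=30)
```

Overlapping windows make consecutive frames share most of their music context, which tends to keep the per-frame styles from jumping abruptly.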